Dynamic Allocation of Autonomy

As robotics technology becomes more widespread, we will increasingly see robots that share control with human users and task partners. Our premise is that reasoning about the quality of the information signals from both the human and the autonomy is fundamental to robotic systems that collaborate with, operate in close proximity to, share control with, or assist humans.

For human-robot teams operating with limited communication bandwidth, possibly separated by physical distance and in adversarial environments, the information shared live between teammates will likely be sparse, intermittent, or inconsistent. Under such circumstances, it is crucial not only that the human understand the robot autonomy well enough to provide sound control input or guidance, but also that the automated system understand the limits on the quality of the information the human provides.

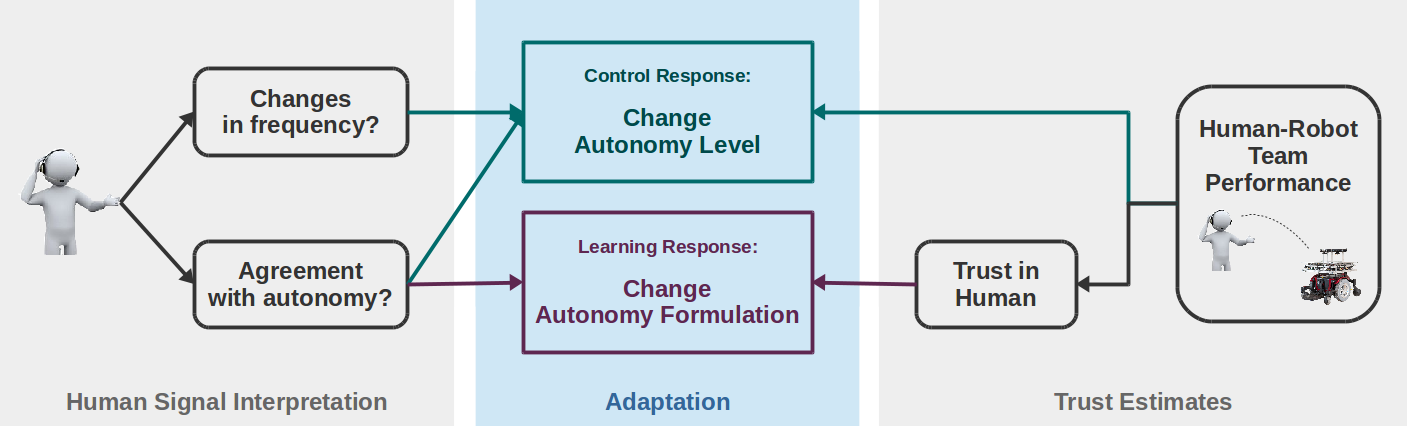

This project introduces a framework for the dynamic allocation of control between human and robot teammates that is appropriate for use under limited communication bandwidth and changing capabilities of the human-robot team. The allocation of autonomy is dynamic, as are the interactions between the teammates and their roles. To inform this framework, an initial pilot study explicitly modulated various degradations of the communication channel and predicted when degradation was occurring and of what type.
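The page does not say how channel degradation is detected or how a detected degradation maps to an autonomy level. As a minimal sketch, assuming degradation can be summarized by message latency and dropout estimated from per-message timestamps, the Python below classifies the channel with fixed thresholds and maps the result to one of three autonomy levels. The degradation types, the thresholds, the level names, and the function names (`channel_stats`, `classify`, `allocate`) are all hypothetical and are not taken from the project.

```python
from dataclasses import dataclass
from enum import Enum
from statistics import mean


class Degradation(Enum):
    NONE = "none"
    LATENCY = "latency"  # messages arrive, but late
    DROPOUT = "dropout"  # messages are lost


class AutonomyLevel(Enum):
    TELEOPERATION = 0  # human commands pass through unchanged
    SHARED = 1         # human and robot commands are blended
    SUPERVISED = 2     # robot acts on its own; human monitors and intervenes


@dataclass
class ChannelStats:
    mean_latency_s: float  # average send-to-receive delay of delivered messages
    dropout_rate: float    # fraction of sent messages never received


def channel_stats(sent_times, recv_times):
    """Estimate latency and dropout; recv_times[i] is None if message i was lost."""
    if not recv_times:
        raise ValueError("no messages to evaluate")
    delays = [r - s for s, r in zip(sent_times, recv_times) if r is not None]
    lost = sum(r is None for r in recv_times)
    return ChannelStats(
        mean_latency_s=mean(delays) if delays else float("inf"),
        dropout_rate=lost / len(recv_times),
    )


def classify(stats, max_latency_s=0.2, max_dropout=0.1):
    """Hypothetical rule-based classifier; the thresholds are placeholders."""
    if stats.dropout_rate > max_dropout:
        return Degradation.DROPOUT
    if stats.mean_latency_s > max_latency_s:
        return Degradation.LATENCY
    return Degradation.NONE


def allocate(degradation):
    """Hypothetical policy: the worse the channel, the more control shifts to the robot."""
    return {
        Degradation.NONE: AutonomyLevel.TELEOPERATION,
        Degradation.LATENCY: AutonomyLevel.SHARED,
        Degradation.DROPOUT: AutonomyLevel.SUPERVISED,
    }[degradation]
```

For example, `allocate(classify(channel_stats([0.0, 0.1, 0.2, 0.3], [0.05, None, 0.26, None])))` sees half of the messages lost and selects `AutonomyLevel.SUPERVISED`. In practice a learned classifier could replace the fixed thresholds in `classify`, and the table in `allocate` is only one possible allocation policy.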

To quantify task difficulty more rigorously (for use as a trigger to switch autonomy levels), we developed a formalized approach to task characterization for human-robot teams using Taguchi design of experiments and conjoint analysis. We found that rotational features of a task contributed significantly more than translational features to decreased performance and increased difficulty, and that rotational features and features leading to kinematic singularities were the most useful for triggering assistance from the autonomy. To characterize the control interface used by the human operator more rigorously, we developed an open-source interface assessment package, the first of its kind for assistive robotics. Our study found statistically significant differences across multiple metrics when the same human performed the same task with a different interface, most notably in signal settling time. A more intelligent, interface-aware interpretation of the human's control signal is likely to improve human-robot team performance.
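The page does not give the factors, response variable, or metric definitions used in these studies. As a minimal sketch, assuming a smaller-is-better response such as task completion time, the Python below shows two standard ingredients of the analyses described above: Taguchi main effects computed on the signal-to-noise ratio SN = -10 log10(mean(y^2)) over the standard L4 orthogonal array, and a conventional control-theory settling time (the time after which a signal stays within a band, here ±5%, of its final value). Conjoint analysis is not shown. The factor interpretation, the 5% band, and the function names are assumptions, and the open-source package's own settling-time definition may differ.

```python
import math
from statistics import mean

# Standard Taguchi L4 orthogonal array: 4 runs, 3 two-level factors (0/1).
# Factor meanings are hypothetical, e.g. (translation, rotation, near-singularity).
L4 = [(0, 0, 0), (0, 1, 1), (1, 0, 1), (1, 1, 0)]


def sn_smaller_is_better(ys):
    """Taguchi S/N ratio for responses where smaller is better (e.g. completion time)."""
    return -10.0 * math.log10(mean(y * y for y in ys))


def main_effects(design, sn):
    """Effect of each factor: mean S/N at level 1 minus mean S/N at level 0.

    Higher S/N is always better, so a negative effect means that switching
    the factor to level 1 degrades performance.
    """
    effects = []
    for j in range(len(design[0])):
        at_hi = [s for row, s in zip(design, sn) if row[j] == 1]
        at_lo = [s for row, s in zip(design, sn) if row[j] == 0]
        effects.append(mean(at_hi) - mean(at_lo))
    return effects


def settling_time(t, x, band=0.05):
    """Time from t[0] until x enters, and stays within, +/- band*|final| of its final value."""
    final = x[-1]
    tol = band * abs(final)
    # Scan backwards for the last sample outside the band.
    for i in range(len(x) - 1, -1, -1):
        if abs(x[i] - final) > tol:
            return t[i + 1] - t[0]
    return 0.0  # the signal was inside the band from the start
```

Each row of `L4` is one experimental run. Averaging S/N over the runs at each level isolates a factor's effect because, in an orthogonal array, every level of one factor appears equally often with every level of the others.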

We have also evaluated various formulations for control sharing at a single autonomy level and found that preferences among these formulations differ across users and, moreover, can be predicted with reasonably good accuracy, suggesting that dynamic allocation should perhaps also occur within a given autonomy level. As for intent prediction, our work has found that the mechanism used to estimate the human's intent can affect when an autonomy-level shift is triggered, and that intent estimates are less accurate when inferred from information transmitted over more limited communication channels.
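One common formulation of shared control at a single autonomy level is linear blending, in which the executed command is `u = (1 - alpha) * u_h + alpha * u_r` for human command `u_h`, robot command `u_r`, and arbitration weight `alpha` in [0, 1], with `alpha` often tied to confidence in the predicted intent. The Python sketch below assumes a 2D velocity command, a known set of candidate goals, and, as the intent estimator, a softmax over the cosine similarity between the human's command and the direction to each goal. None of these choices, nor the names `goal_probabilities`, `blend`, and `beta`, come from the project; they are illustrative stand-ins.

```python
import math


def normalize(v):
    """Return v scaled to unit length, or (0, 0) for a zero vector."""
    n = math.hypot(v[0], v[1])
    return (0.0, 0.0) if n == 0 else (v[0] / n, v[1] / n)


def goal_probabilities(pos, u_h, goals, beta=5.0):
    """Hypothetical intent estimator: softmax over the cosine similarity between
    the human command u_h and the direction from pos to each candidate goal."""
    d = normalize(u_h)
    scores = []
    for gx, gy in goals:
        to_g = normalize((gx - pos[0], gy - pos[1]))
        scores.append(beta * (d[0] * to_g[0] + d[1] * to_g[1]))
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    z = sum(exps)
    return [e / z for e in exps]


def blend(pos, u_h, goals, speed=1.0):
    """Linear blending u = (1 - alpha) * u_h + alpha * u_r toward the most likely goal."""
    probs = goal_probabilities(pos, u_h, goals)
    k = max(range(len(goals)), key=lambda i: probs[i])
    dx, dy = normalize((goals[k][0] - pos[0], goals[k][1] - pos[1]))
    u_r = (speed * dx, speed * dy)
    n = len(goals)
    # alpha is 0 for a uniform belief and 1 when one goal has all the probability.
    alpha = 1.0 if n == 1 else (probs[k] - 1.0 / n) / (1.0 - 1.0 / n)
    u = tuple((1.0 - alpha) * h + alpha * r for h, r in zip(u_h, u_r))
    return u, alpha
```

Because `alpha` depends only on the intent estimate here, a noisier or more coarsely quantized `u_h`, as might arrive over a limited channel, changes both the inferred goal and how much control the robot takes. A per-user scaling of `alpha` would be one hypothetical way to reflect the differing user preferences described above.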

Funding Source: Office of Naval Research (ONR N00014-16-1-2247)