Robot Machine Learning for Human Motor Learning

As assistive machines offer greater functionality, they often also become more complex to control. Operating such machines requires interfaces capable of issuing these complex control signals, and the commercial interfaces available to people with severe motor impairments fall short. As a result, those who arguably would benefit most from assistive machines are left without access to them.

SRAlab’s novel Body Machine Interface (BMI) has the potential to provide complex, high-dimensional control signals by turning the body into a joystick, with the added benefit of promoting motor rehabilitation. The question this project aims to answer is whether the BMI can indeed be used to control a complex assistive robot.

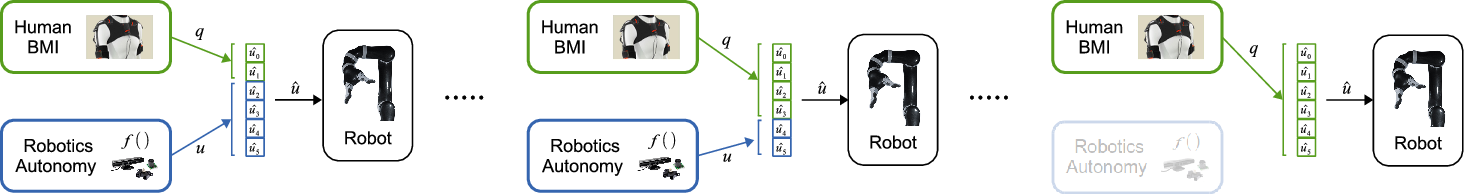

Our proposed solution is to leverage robot autonomy to handle a portion of the control and to gradually transfer control to the human operator. To train the operator to generate control signals of increasingly high dimension, we additionally use machine learning to decide when to unlock additional control dimensions for the human to operate.

In collaboration with Ferdinando Mussa-Ivaldi (Biomedical Engineering, Northwestern University) and the Shirley Ryan AbilityLab.

Funding Source: National Institutes of Health (R01-EB024058)